Evolution of Computer Science

The following is adapted from the 2005 ACM Computing Curricula Report

Before the 1990s

Undergraduate degree programs in the computing-related disciplines began to emerge in the 1960s. Originally, there were only three kinds of computing-related degree programs in North America: computer science, electrical engineering, and information systems. Each of these disciplines was concerned with its own well-defined area of computing. Because they were the only prominent computing disciplines and because each one had its own area of work and influence, it was much easier for students to determine which kind of degree program to choose. For students who wanted to become experts in software development or in the theoretical aspects of computing, computer science was the obvious choice. For students who wanted to work with hardware, electrical engineering was the clear option. For students who wanted to use hardware and software to solve business problems, information systems was the right choice.

Each of these three disciplines had its own domain. There was no shared sense that they constituted a family of computing disciplines. As a practical matter, computer scientists and electrical engineers sometimes worked closely together because they were both concerned with developing new technology, were often housed in the same part of the university, and sometimes required each other’s help. Information systems specialists had ties with business schools and did not have much interaction with computer scientists and electrical engineers.

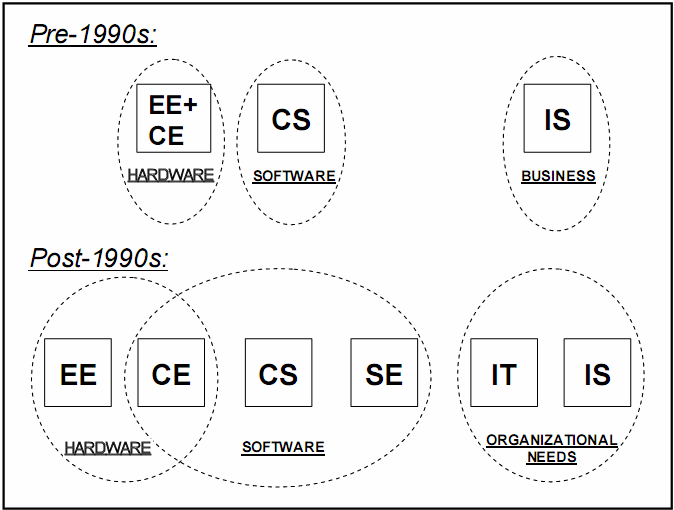

Before the 1990s, the only major change in this landscape in the U.S. was the development of computer engineering. Prior to the invention of chip-based microprocessors, computer engineering was one of several areas of specialization within electrical engineering. With the advent of the microprocessor in the mid-1970s, computer engineering began to emerge from within electrical engineering to become a discipline unto itself. For many people outside the engineering community, however, the distinction between electrical engineering and computer engineering was not clear. Before the 1990s, therefore, when prospective students surveyed the choices of computing-related degree programs, most would have perceived the computing disciplines as shown in the top half of the figure above. In the figure, the distance between two disciplines indicates how closely the people in those disciplines worked with each other.

Significant Developments of the 1990s

During the 1990s, several developments changed the landscape of the computing disciplines in North America, although in other parts of the world some of these changes occurred earlier.

Computer engineering solidified its emergence from electrical engineering. Computer engineering emerged from electrical engineering during the late 1970s and the 1980s, but it was not until the 1990s that computer chips became basic components of most kinds of electrical devices and many kinds of mechanical devices. (For example, modern automobiles contain numerous computers that perform tasks that are transparent to the driver.) Computer engineers design and program the chips that permit digital control of many kinds of devices. The dramatic expansion in the kinds of devices that rely on chip-based digital logic helped computer engineering solidify its status as a strong field and, during the 1990s, unprecedented numbers of students applied to computer engineering programs. Outside of North America, these programs often had titles such as computer systems engineering.

Computer science grew rapidly and became accepted into the family of academic disciplines. At most American colleges and universities, computer science first appeared as a discipline in the 1970s. Initially, there was considerable controversy about whether computer science was a legitimate academic discipline. Proponents asserted that it was a legitimate discipline with its own identity, while critics dismissed it as a vocational specialty for technicians, a research platform for mathematicians, or a pseudo-discipline for computer programmers. By the 1990s, computer science had developed a considerable body of research, knowledge, and innovation that spanned the range from theory to practice, and the controversy about its legitimacy died down. Also during the 1990s, computer science departments faced unprecedented demands. Industry needs for qualified computer science graduates exceeded supply by a large factor, and enrollments in CS programs grew dramatically. While CS had already experienced cycles of increasing and decreasing enrollments throughout its brief history, the enrollment boom of the 1990s was of such magnitude that it seriously strained the ability of CS departments to handle the very large numbers of students. With increased demands for both teaching and research, the number of CS faculty at many universities grew significantly.

Software engineering had emerged as an area within computer science. As computing is used to attack a wider range of complex problems, creating reliable software becomes more difficult. With large, complex programs, no one person can understand the entire program, and various parts of the program can interact in unpredictable ways. (For example, fixing a bug in one part of a program can create new bugs elsewhere.) Computing is also used in safety-critical tasks where a single bug can cause injury or death. Over time, it became clear that producing good software is very difficult, very expensive, and very necessary. This led to the creation of software engineering, a term that originated at a NATO-sponsored conference held in Garmisch, Germany, in 1968. While computer science (like other sciences) focuses on creating new knowledge, software engineering (like other engineering disciplines) focuses on rigorous methods for designing and building things that reliably do what they’re supposed to do. Major conferences on software engineering were held in the 1970s and, during the 1980s, some computer science degree programs included software engineering courses. However, in the U.S. it was not until the 1990s that one could reasonably expect to find software engineering as a significant component of computer science study at many institutions.

Software engineering began to develop as a discipline unto itself. Originally, the term software engineering was introduced to reflect the application of traditional ideas from engineering to the problems of building software. As software engineering matured, the scope of its challenge became clearer. In addition to its computer science foundations, software engineering also involves human processes that, by their nature, are harder to formalize than the logical abstractions of computer science.

Experience with software engineering courses within computer science curricula convinced many educators that such courses can teach students about the field of software engineering but usually do not succeed in teaching them how to be software engineers. Many experts concluded that the latter goal requires a range of coursework and applied project experience that goes beyond what can be added to a computer science curriculum. Degree programs in software engineering emerged in the United Kingdom and Australia during the 1980s, but these programs were in the vanguard. In the United States, degree programs in software engineering, designed to provide a more thorough foundation than can be provided within computer science curricula, began to emerge during the 1990s.

Information systems had to address a growing sphere of challenges. Prior to the 1990s, many information systems specialists focused primarily on the computing needs that the business world had faced since the 1960s: accounting systems, payroll systems, inventory systems, and the like. By the end of the 1990s, networked personal computers had become basic commodities. Computers were no longer tools only for technical specialists; they became integral parts of the work environment, used by people at all levels of the organization. Because of the expanded role of computers, organizations had more information available than ever before, and organizational processes were increasingly enabled by computing technology. The problems of managing information became extremely complex, and the challenges of making proper use of information and technology to support organizational efficiency and effectiveness became crucial issues. As a result, the challenges faced by information systems specialists grew in size, complexity, and importance. In addition, information systems as a field paid increasing attention to the use of computing technology as a means for communication and collaborative decision making in organizations.

Information technology programs began to emerge in the late 1990s. During the 1990s, computers became essential work tools at every level of most organizations, and networked computer systems became the information backbone of organizations. While this improved productivity, it also created new workplace dependencies, because problems in the computing infrastructure could limit employees’ ability to do their work. IT departments within corporations and other organizations took on the new job of ensuring that the organization’s computing infrastructure was suitable, that it worked reliably, and that people in the organization had their computing-related needs met and their problems solved. By the end of the 1990s, it became clear that academic degree programs were not producing graduates who had the right mix of knowledge and skills to meet these essential needs. Colleges and universities developed degree programs in information technology to fill this crucial void.

Collectively, these developments reshaped the landscape of the computing disciplines. Tremendous resources were allocated to information technology activities in all industrialized societies because of various factors, including the explosive growth of the World Wide Web, anticipated Y2K problems, and, in Europe, the launch of the euro.

After the 1990s

The new landscape of computing degree programs reflects the ways in which computing as a whole has matured to address the problems of the new millennium. In the U.S., computer engineering has solidified its status as a discipline distinct from electrical engineering and assumed a primary role with respect to computer hardware and related software. Software engineering has emerged to address the important challenges inherent in building software systems that are reliable and affordable. Information technology has come out of nowhere to fill a void that the other computing disciplines did not adequately address. This maturation and evolution has created a greater range of possibilities for students and educational institutions.

The increased diversity of computing programs means that students face choices that are more ambiguous than they were before the 1990s. The bottom half of the figure above shows how prospective students might perceive the current range of choices available to them; the dotted ovals show how they are likely to perceive the primary focus of each discipline. It is clear where students who want to study hardware should go: computer engineering has emerged from electrical engineering as the home for those working on the hardware and software issues involved in the design of digital devices. For those with other interests, the choices are not so clear-cut. In the pre-1990s world, students who wanted to become experts in software development would study computer science. The post-1990s world presents meaningful choices: computer science, software engineering, and even computer engineering each includes its own perspective on software development. These three choices imply real differences: for CE, attention to software is focused on hardware devices; for SE, the emphasis is on creating software that satisfies robust real-world requirements; and for CS, software is the currency in which ideas are expressed and a wide range of computing problems and applications are explored.

Such distinctions may not be visible to prospective students. Naive students might perceive that all three disciplines share an emphasis on software and are otherwise indistinguishable.

Similarly, in the pre-1990s world, a primary area for applying computing to solve real-world problems was business, and information systems was the home for such work. The scope of real-world uses has broadened from business to organizations of every kind, and students can now choose between information systems and information technology programs. Although the IT and IS disciplines both include a focus on software and hardware, neither discipline emphasizes them for their own sake; rather, both use technology as a critical instrument for addressing organizational needs. IS focuses on the generation and use of information, while IT focuses on ensuring that the organization’s infrastructure is appropriate and reliable. Prospective students, however, might be unaware of these important differences and see only that IS and IT share a purpose in using computing to meet the needs of technology-dependent organizations.